
One of the critical assumptions of multiple linear regression is that there should be little or no autocorrelation in the data. Autocorrelation occurs when the residuals are not independent of each other. It is typical of stock prices, for example, where the price of a stock is not independent of its previous price. No or low autocorrelation is the second assumption of linear regression.
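
A common numerical check for first-order autocorrelation in the residuals is the Durbin-Watson statistic, d = Σ(e_t - e_{t-1})² / Σ e_t².

- Values near 2 suggest no autocorrelation.
- Values near 0 suggest positive autocorrelation, as in a persistent price series.
- Values near 4 suggest negative autocorrelation.

Below is a minimal plain-Python sketch. The AR(1) residual simulation and its `phi` parameter are illustrative assumptions, not data from this article.

```python
import random


def durbin_watson(residuals):
    """Sum of squared successive differences divided by the sum of squared residuals."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den


def simulate_ar1(n, phi, seed=0):
    """Hypothetical AR(1) residuals: e_t = phi * e_{t-1} + white noise."""
    rng = random.Random(seed)
    e = [rng.gauss(0, 1)]
    for _ in range(n - 1):
        e.append(phi * e[-1] + rng.gauss(0, 1))
    return e


independent = simulate_ar1(2000, phi=0.0)  # no autocorrelation
persistent = simulate_ar1(2000, phi=0.9)   # strong positive autocorrelation

print(round(durbin_watson(independent), 2))  # close to 2
print(round(durbin_watson(persistent), 2))   # close to 2 * (1 - 0.9) = 0.2
```

statsmodels provides the same statistic as `durbin_watson` in `statsmodels.stats.stattools`. It can be applied to the `resid` attribute of a fitted OLS model.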

The first assumption of simple linear regression is that the two variables in question should have a linear relationship. To check it, we fit a linear regression model and examine the residuals. In this example, the errors also have the same variance throughout. The graph in the image below has Carpet Area on the X-axis and Price on the Y-axis.

In a simple linear regression model there is only one independent variable, so this assumption (no multicollinearity among the independent variables) holds by default. In multiple linear regression models, however, there is more than one independent variable.

In the model above, the regression coefficients are the same. The only differences are in the standard errors, and therefore in the t-values. The robust standard errors are larger than the standard errors of the original model. We can therefore conclude that, in the presence of heteroscedasticity, the standard errors of the coefficients were underestimated.
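
The following sketch shows why the coefficients stay the same while the standard errors change. It uses a single independent variable and computes two standard errors for the slope: the classical OLS one and White's heteroscedasticity-consistent (HC0) one. The simulated data, with noise that grows with x, are a hypothetical illustration, not the model discussed above.

```python
import math
import random


def slope_standard_errors(x, y):
    """OLS slope of y on x, with its classical and HC0 (White) standard errors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

    # Classical SE: assumes one common error variance for every observation.
    sigma2 = sum(e ** 2 for e in resid) / (n - 2)
    se_classical = math.sqrt(sigma2 / sxx)

    # HC0 (White) SE: each observation gets its own variance, estimated by e_i^2.
    se_robust = math.sqrt(sum((xi - mx) ** 2 * e ** 2 for xi, e in zip(x, resid))) / sxx
    return slope, se_classical, se_robust


# Hypothetical heteroscedastic data: the noise standard deviation grows with x.
rng = random.Random(1)
x = [rng.uniform(1, 50) for _ in range(5000)]
y = [2 + 3 * xi + rng.gauss(0, 0.02 * xi ** 2) for xi in x]

slope, se_classical, se_robust = slope_standard_errors(x, y)
print(f"slope={slope:.3f}  classical SE={se_classical:.4f}  robust SE={se_robust:.4f}")
```

The slope is identical in both calculations; only the standard error, and therefore the t-value, differs. In statsmodels, robust errors of this kind are requested with `fit(cov_type="HC0")`. The options `"HC1"` to `"HC3"` are finite-sample refinements of the White method.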

Testing for Heteroscedasticity

The White test allows the heteroscedasticity process to be a function of one or more of your independent variables. It is similar to the Breusch-Pagan test. The only difference is that the White test allows for a nonlinear and interactive influence of the independent variables on the error variance. The homoscedasticity assumption of the classical linear regression model requires the variance of the error term to be constant across all observations. Plotting the residuals against the fitted values lets us check this assumption. Squaring each residual and summing the squares gives the residual sum of squares, which measures the unexplained variation.
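
The Breusch-Pagan idea fits in a few lines: regress the squared OLS residuals on the independent variable and check whether they are predictable.

- The version below is Koenker's studentized form with a single regressor.
- Its statistic is LM = n·R² of that auxiliary regression.
- LM is compared with a chi-square distribution with 1 degree of freedom.
- The simulated data are hypothetical.

The White test would add the squared regressor to the auxiliary regression, plus the cross products when there are several regressors.

```python
import math
import random


def fit_line(x, y):
    """OLS intercept and slope for y regressed on a single x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


def breusch_pagan(x, y):
    """Koenker's studentized Breusch-Pagan test with one regressor: LM = n * R^2."""
    b0, b1 = fit_line(x, y)
    sq_resid = [(b - (b0 + b1 * a)) ** 2 for a, b in zip(x, y)]

    # Auxiliary regression of the squared residuals on the same regressor.
    a0, a1 = fit_line(x, sq_resid)
    mean_sq = sum(sq_resid) / len(sq_resid)
    ss_res = sum((s - (a0 + a1 * a)) ** 2 for a, s in zip(x, sq_resid))
    ss_tot = sum((s - mean_sq) ** 2 for s in sq_resid)
    lm = max(0.0, len(x) * (1 - ss_res / ss_tot))  # guard against tiny negative rounding

    # Under H0 (homoscedasticity) LM ~ chi-square(1); P(chi2_1 > t) = erfc(sqrt(t / 2)).
    p_value = math.erfc(math.sqrt(lm / 2))
    return lm, p_value


rng = random.Random(42)
x = [rng.uniform(1, 100) for _ in range(1000)]
y_homo = [5 + 2 * a + rng.gauss(0, 10) for a in x]        # constant error variance
y_hetero = [5 + 2 * a + rng.gauss(0, 0.5 * a) for a in x]  # variance grows with x

print(breusch_pagan(x, y_homo))
print(breusch_pagan(x, y_hetero))
```

For real models, `statsmodels.stats.diagnostic` offers `het_breuschpagan` and `het_white`. Both take the residuals and the design matrix.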

It is very likely that the regression suffers from multicollinearity. If a variable is not intuitively important, dropping it or one of the variables correlated with it can fix the issue.

The Assumption of Normality of Errors – If the error terms are not normally distributed, the standard errors of the OLS estimates won't be reliable. This means the confidence intervals may be too wide or too narrow.
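
A standard way to quantify multicollinearity is the variance inflation factor, VIF_j = 1 / (1 - R_j²). Here R_j² comes from regressing predictor j on the other predictors.

The sketch below assumes exactly two predictors, in which case R_j² is simply their squared correlation. The data are simulated for illustration. Values above roughly 5 or 10 are often quoted as a warning sign, but those cut-offs are rules of thumb.

```python
import math
import random


def pearson_r(a, b):
    """Sample correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    var_a = sum((p - ma) ** 2 for p in a)
    var_b = sum((q - mb) ** 2 for q in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(x1, x2):
    """VIF = 1 / (1 - R^2); with exactly two predictors, R^2 is their squared correlation."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)


rng = random.Random(7)
x1 = [rng.gauss(0, 1) for _ in range(1000)]
x2_unrelated = [rng.gauss(0, 1) for _ in range(1000)]
x2_collinear = [v + rng.gauss(0, 0.1) for v in x1]  # nearly a copy of x1

print(round(vif_two_predictors(x1, x2_unrelated), 2))  # near 1
print(round(vif_two_predictors(x1, x2_collinear), 1))  # far above 10
```

With more predictors, `variance_inflation_factor` in `statsmodels.stats.outliers_influence` handles the general case.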

Why is Heteroscedasticity bad?

This test is a goodness-of-fit test of whether the sample data have skewness and kurtosis matching a normal distribution. Using the statsmodels.api library, we can check the linearity of the regression. So when these weights are squared, squaring the small weights underestimates the effect of high variance. For the reasons above, heteroscedasticity can be either pure or impure. If we fit the correct model (linear or non-linear) and there is still a visible pattern in the residuals, it is called pure heteroscedasticity. Several modifications of the White method of computing heteroscedasticity-consistent standard errors have been proposed as corrections with better finite-sample properties.
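
One test that matches the goodness-of-fit description above is the Jarque-Bera test, which we assume is the one meant here. Its statistic is JB = n/6 · (S² + (K - 3)²/4), where S is the sample skewness and K the sample kurtosis. Under normality, JB approximately follows a chi-square distribution with 2 degrees of freedom. A minimal sketch on simulated residuals:

```python
import math
import random


def jarque_bera(sample):
    """JB = n/6 * (S^2 + (K - 3)^2 / 4), using biased (population) moment estimates."""
    n = len(sample)
    mean = sum(sample) / n
    m2 = sum((v - mean) ** 2 for v in sample) / n
    m3 = sum((v - mean) ** 3 for v in sample) / n
    m4 = sum((v - mean) ** 4 for v in sample) / n
    skew = m3 / m2 ** 1.5
    kurt = m4 / m2 ** 2  # equals 3 for a normal distribution
    jb = n / 6 * (skew ** 2 + (kurt - 3) ** 2 / 4)
    p_value = math.exp(-jb / 2)  # chi-square survival function with 2 df
    return jb, p_value, skew, kurt


rng = random.Random(4)
normal_resid = [rng.gauss(0, 1) for _ in range(1000)]       # hypothetical well-behaved residuals
skewed_resid = [rng.expovariate(1.0) for _ in range(1000)]  # hypothetical right-skewed residuals

jb_n, p_n, _, _ = jarque_bera(normal_resid)
jb_s, p_s, _, _ = jarque_bera(skewed_resid)
print(round(jb_n, 2), round(jb_s, 1))
```

statsmodels exposes the same test as `jarque_bera` in `statsmodels.stats.stattools`.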

- Similarly, there could be students with lower scores in spite of sleeping less.


- With a multiple regression made up of several independent variables, the R-squared should be adjusted.

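
The usual adjustment is adjusted R² = 1 - (1 - R²)(n - 1)/(n - k - 1). Here n is the number of observations and k the number of independent variables, not counting the intercept. A minimal sketch with made-up fitted values:

```python
def r_squared(y, y_hat):
    """Share of the variation in y explained by the fitted values."""
    mean_y = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, k):
    """Penalize R^2 for the number of independent variables k (intercept not counted)."""
    if n - k - 1 <= 0:
        raise ValueError("need more observations than independent variables + 1")
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Hypothetical observed and fitted values from a model with one independent variable.
y = [3, 5, 7, 9, 11]
y_hat = [3.2, 4.8, 7.1, 8.9, 11.0]
r2 = r_squared(y, y_hat)
print(round(r2, 4), round(adjusted_r_squared(r2, n=len(y), k=1), 4))  # 0.9975 0.9967
```

Adding an independent variable always keeps R² the same or raises it. Adjusted R² can fall if the new variable explains too little to justify the lost degree of freedom.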

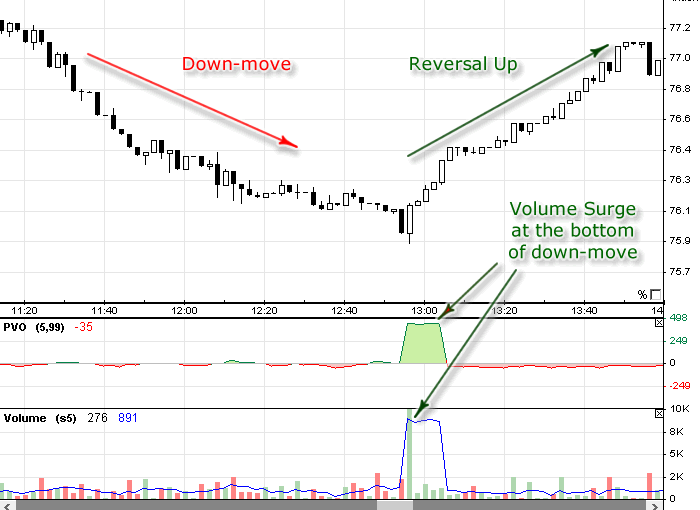

Specifically, heteroscedasticity is a systematic change in the spread of the residuals over the range of measured values. Finally, the fifth assumption of a classical linear regression model is that there should be homoscedasticity in the data. A scatterplot is again the best way to check for homoscedasticity. The data are said to be homoscedastic when the residuals have equal variance along the regression line. One of the assumptions of the classical linear regression model is that there is no heteroscedasticity. Biased standard errors lead to biased inference, so the results of hypothesis tests may be wrong.

This will help reduce the variance because, quite obviously, the number of infections will be large in cities with a large population. The first step is to identify the source of heteroscedasticity. Heteroscedasticity makes the coefficient estimates less precise, so they are more likely to lie further away from the population values. However, if we fit the wrong model and then observe a pattern in the residuals, it is a case of impure heteroscedasticity. The measures needed to overcome heteroscedasticity depend on its type.
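
When the error variance grows with a known quantity such as population, one standard remedy is weighted least squares (WLS). This is a swapped-in technique, not necessarily the article's own method. Each observation is weighted by the inverse of its assumed error variance.

The sketch assumes the variance is proportional to population and uses invented city data. With equal weights, it reduces to ordinary least squares.

```python
import random


def weighted_fit(x, y, w):
    """Weighted least squares intercept and slope for y on a single x."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical cities: infections = 0.02 * population + noise whose variance grows with population.
rng = random.Random(3)
population = [rng.uniform(1e4, 1e6) for _ in range(200)]
infections = [0.02 * p + rng.gauss(0, 3 * p ** 0.5) for p in population]

ols = weighted_fit(population, infections, [1.0] * len(population))       # equal weights = OLS
wls = weighted_fit(population, infections, [1.0 / p for p in population])  # weight = 1 / variance
print(ols, wls)
```

Modelling infections per capita, instead of raw counts, is another way to apply the same idea.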

Graphical depiction of results from heteroscedasticity test in STATA

Various tests help detect heteroscedasticity, such as the Breusch-Pagan test and the White test. Here are some examples of the assumptions of linear regression in situations you experience in real life. Now that you know what constitutes a linear regression, we shall go into its assumptions.

When this condition is violated, there is heteroscedasticity in the model. Consider the same example as above, and suppose that for houses with a smaller carpet area the errors (residuals) are very small. As the carpet area increases, the variance of the predictions increases, which results in larger errors or residual terms. When we plot the values again, we see the typical cone shape, which strongly indicates the presence of heteroscedasticity in the model.
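
The cone can also be checked numerically instead of by eye. This sketch does three things:

- It simulates hypothetical house data in which the noise in price is proportional to carpet area.
- It fits an OLS line.
- It compares the residual spread among the smallest and the largest houses.

The constants (price per unit area, noise scale) are invented for illustration.

```python
import random
import statistics


def fit_line(x, y):
    """OLS intercept and slope for y regressed on a single x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


# Hypothetical houses, sorted by carpet area; price noise grows in proportion to area.
rng = random.Random(11)
area = sorted(rng.uniform(200, 3000) for _ in range(900))
price = [50 + 0.1 * a + rng.gauss(0, 0.02 * a) for a in area]

b0, b1 = fit_line(area, price)
resid = [p - (b0 + b1 * a) for a, p in zip(area, price)]

# Compare the residual spread for the smallest and largest thirds of carpet area.
third = len(resid) // 3
spread_small = statistics.pstdev(resid[:third])
spread_large = statistics.pstdev(resid[-third:])
print(round(spread_small, 1), round(spread_large, 1))
```

A much larger spread in the upper group is the numerical counterpart of the cone in the residual plot.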

Assumptions of Classical Linear Regression Model

If an independent variable is correlated with the error term, that variable can be used to predict the error term. This violates the principle that the error term represents unpredictable random error. Therefore, none of the independent variables should be correlated with the error term.
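
A common way for an independent variable to become correlated with the error term is an omitted variable. In the hypothetical simulation below, y depends on x and on a variable z that is correlated with x. Regressing y on x alone moves z into the error term and biases the slope.

The sketch also shows why this assumption cannot be checked from the fitted residuals: OLS makes them uncorrelated with x by construction.

```python
import random


def fit_line(x, y):
    """OLS intercept and slope for y regressed on a single x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


rng = random.Random(5)
n = 2000
x = [rng.gauss(0, 1) for _ in range(n)]
z = [0.8 * xi + rng.gauss(0, 0.6) for xi in x]  # hypothetical omitted variable, correlated with x
y = [1 + 2 * xi + 3 * zi + rng.gauss(0, 1) for xi, zi in zip(x, z)]

# Leaving z out pushes 3 * z into the error term, so the error is correlated with x.
b0, b1 = fit_line(x, y)
print(round(b1, 2))  # close to 2 + 3 * 0.8 = 4.4, not the true effect of 2

# The fitted residuals are uncorrelated with x by construction, so they cannot reveal the problem.
resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
print(sum(xi * e for xi, e in zip(x, resid)))  # zero up to rounding error
```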

Thus, this assumption of simple linear regression holds in the example. It is possible to check the assumption of normally distributed errors using a histogram or a Q-Q plot. A relationship between two variables is statistical, rather than deterministic, when there is no exact formula that determines one from the other.
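
A Q-Q plot pairs the sorted, standardized residuals with the quantiles a normal distribution would produce. The sketch below computes those pairs with the standard library's `statistics.NormalDist`. It uses plotting positions (i - 0.5)/n, which is one common convention among several. It then summarizes how straight the plot is by the correlation of the pairs. The residuals are simulated.

```python
import math
import random
from statistics import NormalDist, mean, pstdev


def qq_points(sample):
    """Pairs (theoretical normal quantile, standardized sample quantile) for a Q-Q plot."""
    n = len(sample)
    mu, sigma = mean(sample), pstdev(sample)
    ordered = sorted((v - mu) / sigma for v in sample)
    # (i - 0.5) / n is one common choice of plotting position.
    theoretical = [NormalDist().inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return list(zip(theoretical, ordered))


def qq_correlation(points):
    """Correlation of the Q-Q points: near 1 when the points hug a straight line."""
    xs, ys = zip(*points)
    mx, my = mean(xs), mean(ys)
    cov = sum((a - mx) * (b - my) for a, b in points)
    return cov / math.sqrt(sum((a - mx) ** 2 for a in xs) * sum((b - my) ** 2 for b in ys))


rng = random.Random(2)
normal_resid = [rng.gauss(0, 1) for _ in range(500)]      # hypothetical normal residuals
skewed_resid = [rng.expovariate(1.0) for _ in range(500)]  # hypothetical skewed residuals
print(round(qq_correlation(qq_points(normal_resid)), 3))
print(round(qq_correlation(qq_points(skewed_resid)), 3))
```

Plotting the pairs from `qq_points` with any charting library gives the Q-Q plot itself. Points bending away from the diagonal at the ends indicate skewed or heavy-tailed errors.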

The Assumption of Homoscedasticity – If errors are heteroscedastic (i.e. this OLS assumption is violated), it will be difficult to trust the standard errors of the OLS estimates. The assumption of homoscedasticity (meaning “same variance”) is central to linear regression models. Heteroscedasticity is present when the size of the error term differs across values of an independent variable. When two variables move in a fixed proportion, it is referred to as a perfect correlation.

Sometimes errors are not normal because the linearity assumption does not hold. So, it is worth checking the linearity assumption again if this assumption fails. Linear regression fits a straight line that attempts to predict the relationship between two variables. However, the relationship it captures should be a statistical one, not a deterministic one. If the two variables under consideration are x and y, the correlation coefficient can be computed with the standard Pearson formula.
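
Assuming the coefficient meant here is Pearson's product-moment correlation, the standard formula for n paired observations (x_i, y_i) with means x̄ and ȳ is:

$$
r_{xy} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}
$$

As a quick check, take x = 1, 2, 3 and y = 2, 4, 6. The deviations are -1, 0, 1 and -2, 0, 2, so r = 4 / √(2 · 8) = 1. The two variables move in a fixed proportion, which is the perfect correlation described above.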

Typically, if the data set is large, then the errors are more or less homoscedastic. This assumption states that the errors are normally distributed, conditional on the independent variables. This OLS assumption is not required for the validity of the OLS method. However, it becomes important when one needs to establish some additional finite-sample properties. In such a scenario, it is better to drop one of the three independent variables from the linear regression model.